Flamedisx tutorial

Flamedisx is a package for inference on Liquid Xenon TPC data. This tutorial assumes you are familiar with these detectors, and we will not attempt to explain jargon like S1, S2, etc.

0. Setup

To run this notebook, you have two options:

Install flamedisx locally with

pip install flamedisxin a fresh environment. This should install all necessary dependencies (in particular, tensorflow 2).Use Google’s colaboratory, so you do not have to install anything and can use a GPU. Follow the steps below:

Open the notebook in colab using https://colab.research.google.com/github/FlamTeam/flamedisx-notebooks/blob/master/Tutorial.ipynb.

Run just the cell below (starting with

!wget -nc ...)Restart the runtime (Runtime -> Restart runtime).

(Optional: make sure you are using a GPU, using Runtime -> Change runtime type)

Run the notebook.

[27]:

!wget -nc https://raw.githubusercontent.com/FlamTeam/flamedisx-notebooks/master/_if_on_colab_setup_flamedisx.ipynb

%run _if_on_colab_setup_flamedisx.ipynb

File ‘_if_on_colab_setup_flamedisx.ipynb’ already there; not retrieving.

Flamedisx is installed :-)

[2]:

import numpy as np

import matplotlib

import matplotlib.pyplot as plt

import flamedisx as fd

import tensorflow as tf

1. Simulation

Before we can do anything, we have to load some data. If you don’t have a LXe TPC handy, flamedisx can simulate some data for you.

[3]:

df = fd.ERSource().simulate(1000)

df.head()

[3]:

| r | theta | z | x | y | drift_time | event_time | energy | p_accepted | quanta_produced | p_el_mean | p_el_fluct | p_el_actual | electrons_produced | photons_produced | photons_detected | photoelectrons_detected | s1 | electrons_detected | s2 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 27.128980 | 0.331562 | -0.764587 | 25.651401 | 8.831037 | 5727.240006 | 1.598717e+18 | 9.549549 | 1.0 | 697 | 0.354844 | 0.040858 | 0.321250 | 226 | 471 | 40 | 51 | 56.370795 | 218 | 4461.906705 |

| 1 | 27.264198 | 6.123424 | -15.258556 | 26.916998 | -4.337246 | 114296.298363 | 1.597546e+18 | 9.519519 | 1.0 | 694 | 0.355339 | 0.040855 | 0.354107 | 250 | 444 | 40 | 53 | 47.949693 | 188 | 3790.083776 |

| 2 | 31.145844 | 4.076766 | -84.743000 | -18.490642 | -25.063115 | 634779.025346 | 1.592554e+18 | 6.546546 | 1.0 | 477 | 0.403905 | 0.040152 | 0.438706 | 201 | 276 | 32 | 40 | 36.259102 | 41 | 832.743029 |

| 3 | 28.102007 | 2.638143 | -26.674209 | -24.615202 | 13.557825 | 199806.806110 | 1.576740e+18 | 9.819819 | 1.0 | 716 | 0.351789 | 0.040878 | 0.317993 | 255 | 461 | 46 | 58 | 55.716626 | 160 | 3203.810733 |

| 4 | 26.267384 | 2.533292 | -28.045790 | -21.555543 | 15.011130 | 210080.823113 | 1.570783e+18 | 8.768768 | 1.0 | 640 | 0.364908 | 0.040775 | 0.380722 | 235 | 405 | 37 | 46 | 45.004449 | 148 | 2978.529926 |

Here we used the default ERSource, which describes a flat-spectrum ER source (with some cutoffs) homogeneously distributed in the TPC.

Flamedisx has other Source classes for different populations. You usually want to define your own Sources. We’ll discuss this later below.

As you can see, the data is a Pandas dataframe. The first columns are direct observables, such as the \(x\) position in cm and the \(s1\) size in PE. The other columns are derived observables and truth info from the flamedisx simulator, which we can ignore for now.

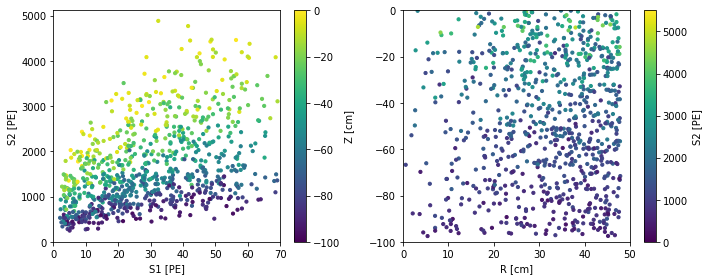

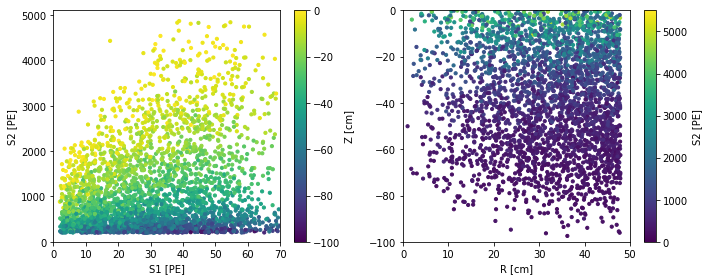

Let’s plot the data:

[4]:

def scatter_2x2d(df, s=10, color_by=None, color_label=None, vmin=None, vmax=None, **kwargs):

f, axes = plt.subplots(1, 2, figsize=(10, 4))

if color_by is None:

c = df['z']

vmin, vmax = -100, 0

else:

c = color_by

plt.sca(axes[0])

plt.scatter(df['s1'], df['s2'], s=s, c=c, vmin=vmin, vmax=vmax, **kwargs)

plt.colorbar(label='Z [cm]' if color_by is None else color_label)

plt.xlim(0, 70)

plt.ylim(0, None)

plt.xlabel("S1 [PE]")

plt.ylabel("S2 [PE]")

if color_by is None:

c = df['s2']

vmin, vmax = 0, 5500

else:

c = color_by

plt.sca(axes[1])

plt.scatter(df['r'], df['z'], c=c, s=s, vmin=vmin, vmax=vmax, **kwargs)

plt.colorbar(label='S2 [PE]' if color_by is None else color_label)

plt.xlabel("R [cm]")

plt.xlim(0, 50)

plt.ylim(-100, 0)

plt.tight_layout()

scatter_2x2d(df)

We asked flamedisx for 1000 events, but we actually got back a few less:

[5]:

len(df)

[5]:

836

This is because the Source includes models for some basic cuts. If you’re curious, the default source cuts events with less than 3 photons detected, S1 outside [2, 70] PE, or S2 outside [200, 5000] PE.

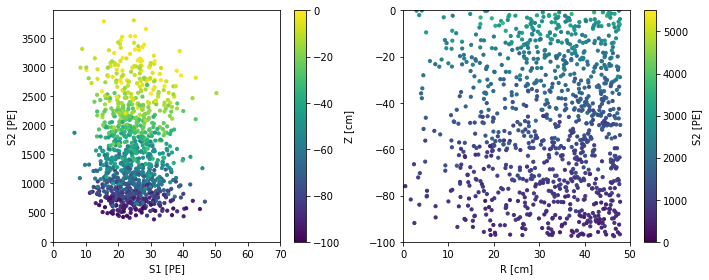

You can also simulate events at specific energies, e.g. at 5 keV:

[6]:

scatter_2x2d(

fd.ERSource().simulate(1000, fix_truth=dict(energy=5.)))

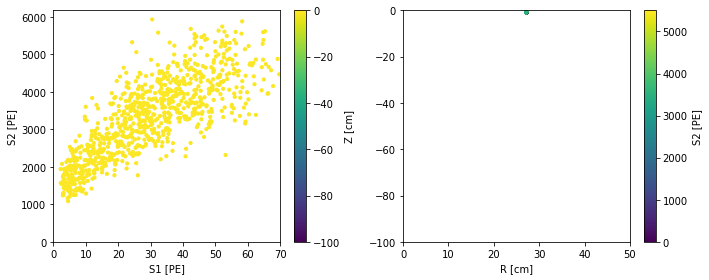

Finally, you can use another dataset to draw the auxiliary observables from – anything besides s1 and s2, i.e. x, y, z, drift time, and (for custom sources) other things you might like. For example, here we simulate events at the same position as the first event in df:

[7]:

scatter_2x2d(

fd.ERSource().simulate(1000, fix_truth=df[:1]))

2. Inference

For this tutorial, let’s just fit something easy: the electron lifetime (elife). To ensure the fits complete in a reasonable time even without a GPU, we simulate just 25 events as the dataset. The ‘true’ elife used to generate this is 452 us. (Flamedisx uses nanoseconds as a time unit by default, so this is 452e3 internally.)

[8]:

smalld = fd.ERSource().simulate(25, full_annotate=True)

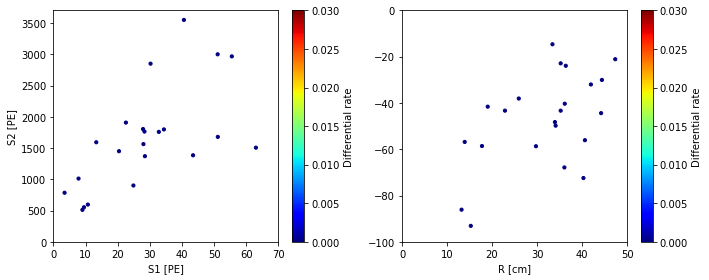

Flamedisx computes the differential rate at each event analytically:

where \(\mu\) is the total expected number of events from the source.

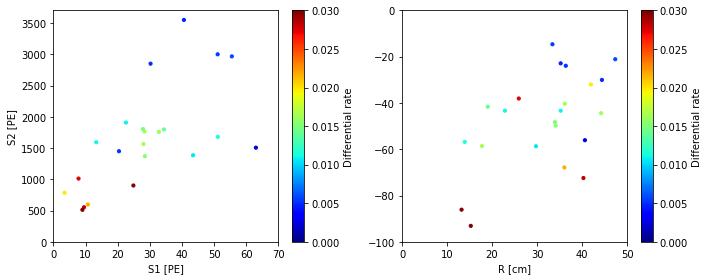

You rarely need to do this yourself, but let’s do it anyway, for two electron lifetimes:

[9]:

source = fd.ERSource(smalld)

for elife_us in (100, 500):

diffrate = source.batched_differential_rate(elife=elife_us * 1e3)

print("Electron lifetime = %d us" % elife_us)

scatter_2x2d(smalld, color_by=diffrate,

vmax=0.03, vmin=0,

color_label='Differential rate', cmap=plt.cm.jet)

plt.show()

100%|██████████| 3/3 [00:04<00:00, 1.44s/it]

Electron lifetime = 100 us

100%|██████████| 3/3 [00:00<00:00, 139.22it/s]

Electron lifetime = 500 us

Notice the differential rates are very low for elife = 100 us. Flamedisx correctly computed that all the events would have to be extreme outliers if elife = 100 us were true. At 500 us, the rates are clearly higher, indicating the events wouldn’t be so unusual at this elife.

Looking directly at differential rates is no way to do inference, you want an extended unbinned log likelihood to do this for you. For a single source, the formula is:

where \(\mu\) is the expected number of events from the source (after all selections). The term inside the log in the sum is the differential rate, which we’ve already seen that flamedisx can compute. For the first term \(\mu\), it still needs simulations at some ” anchor points” – reference parameter space values between it interpolates. This may change soon, see #71.

Flamedisx likelihoods are made with the LogLikelihood class:

[10]:

ll = fd.LogLikelihood(

# Dictionary of sources to use.

# Use multiple sources if your signal/background model has more than one component

sources=dict(er=fd.ERSource),

# Data to use:

data=smalld,

# Parameters to fit, with (lower anchor, upper anchor, number of anchor points):

elife=(300e3, 500e3, 2),

# Allow the absolute ER rate to be fitted as well

free_rates=('er',))

Estimating mus: 100%|██████████| 1/1 [00:00<00:00, 2.81it/s]

You can get the best fit with the bestfit. Guesses are passed with the guess argument; if you pass no guesses, we will guess some parameters for you (the defaults . To convert the bestfit to human-readable form, pass it to summary.

[11]:

%%time

bestfit = ll.bestfit()

ll.summary(bestfit)

er_rate_multiplier: 0.0261 +- 0.00394

elife: 4.59e+05 +- 1.81e+04

Correlation matrix:

er_rate_multiplier elife

er_rate_multiplier 1.000 -0.019

elife -0.019 1.000

CPU times: user 10.4 s, sys: 414 ms, total: 10.8 s

Wall time: 10.4 s

The best-fit value depends on the simulated data, which varies each time you run the notebook. Most of the time, it should be within +- 10 us of the true value, 452 us. This seems pretty amazing for just 25 events, but of course the ER model is here assumed completely known in all other respects, which is unrealistic.

The errors and covariances are computed from the inverse of the Hessian (matrix of second derivatives). These are like the HESSE errors from migrad. You can get the inverse hessian yourself if you want:

[12]:

inv_h = ll.inverse_hessian(bestfit)

If you already computed the Hessian, you can pass it to summary so it doesn’t have to do it again. It will run almost instantly in that case.

[13]:

ll.summary(bestfit, inverse_hessian=inv_h)

er_rate_multiplier: 0.0261 +- 0.00394

elife: 4.59e+05 +- 1.81e+04

Correlation matrix:

er_rate_multiplier elife

er_rate_multiplier 1.000 -0.019

elife -0.019 1.000

Besides fitting, flamedisx can also compute one-dimensional frequentist limits (using ll.limit) and confidence intervals (with ll.interval), e.g.

[14]:

%%time

ll.interval('elife', bestfit=bestfit, confidence_level=0.68)

CPU times: user 3.51 s, sys: 657 ms, total: 4.17 s

Wall time: 2.79 s

[14]:

[435215.3396773964, 487174.84732535813]

The default confidence level is 90%, we requested a 68% interval just for this example. (So don’t be surprised if it didn’t include the true value.)

Computing intervals or limits is more complex and can take longer than fitting. The limits and intervals are computed using Wilk’s theorem, like the MINOS errors from migrad. To do non-asymptotic inference, you can pass in a custom test statistic critical value function with the t_ppf argument.

So far, flamedisx has been using the scipy optimizer (trust-constr) under the hood. We also have other optimizers: * scipy’s TNC. This is activated if you pass use_hessian = False to bestfit or limit. It can be faster (especially on a CPU, it seems) but seems to fail more often. * iminuit: pass optimizer='minuit' * optimizer.bfgs_minimize from tensorflow-probability. Support for this is experimental.

3. Modelling

To do any serious work, you have to define custom sources. This means defining a class which inherits from one of the basic two flamedisx sources (ERSource or NRSource) and overrides one or more of the model functions. Here is the complete list of things you can override for an ER source:

[15]:

sorted(fd.ERSource().model_functions)

[15]:

['double_pe_fraction',

'electron_acceptance',

'electron_detection_eff',

'electron_gain_mean',

'electron_gain_std',

'energy_spectrum_rate_multiplier',

'p_electron',

'p_electron_fluctuation',

'penning_quenching_eff',

'photoelectron_gain_mean',

'photoelectron_gain_std',

'photon_acceptance',

'photon_detection_eff',

'reconstruction_bias_s1',

'reconstruction_bias_s2',

's1_acceptance',

's2_acceptance',

'work']

Each of these can be specified as either a constant or a function. The function can take any observable (such as x or drift_time) as a positional argument and any parameters (something you can fit, e.g. elife) as keyword arguments.

Here’s an example: a source that parametrizes the loss of electrons during drift via the electron absorption_length (in cm) rather than elife (in ns):

[16]:

import tensorflow as tf

class CustomERSource(fd.ERSource):

# staticmethod just means: don't take self as an argument

@staticmethod

def electron_detection_eff(z, absorption_length=60, extraction_efficiency=0.96):

# Note z is negative, and in cm

return extraction_efficiency * tf.exp(z / absorption_length)

Note that we have to use tensorflow inside the model functions. If you keep it (very) simple, you can just replace np -> tf. Or, of course, you can learn tensorflow.

We can fit this as before:

[17]:

ll2 = fd.LogLikelihood(

sources=dict(er=CustomERSource),

data=smalld,

absorption_length=(50., 70., 2),

free_rates=('er',))

Estimating mus: 100%|██████████| 1/1 [00:00<00:00, 2.87it/s]

[18]:

%%time

bf2 = ll2.bestfit(guess=dict(er_rate_multiplier=0.02,

absorption_length=62.))

ll2.summary(bf2)

er_rate_multiplier: 0.026 +- 0.00392

absorption_length: 61.7 +- 2.43

Correlation matrix:

er_rate_multiplier absorption_length

er_rate_multiplier 1.000 -0.004

absorption_length -0.004 1.000

CPU times: user 7.37 s, sys: 327 ms, total: 7.7 s

Wall time: 7.3 s

The result will usually be around 60 cm, which would correspond to our 452 us default elife:

[19]:

452e3 * fd.ERSource().drift_velocity

[19]:

60.342

Remember we’re fitting a very small dataset here, so don’t expect a great result. To get a 90% CL, do

ll2.interval('absorption_length', bestfit=bf2)

4. Modelling 2

Let’s try to fit a more complex part of the ER model. In particular, let’s find p_electron, the fraction of detected quanta that are electrons as a function of energy. This is equivalent to the charge yield Q_y (given a work function), and encodes all effects of exciton/ion splitting and recombination.

Here’s a source implementation that achieves this:

[20]:

class MyERSource(fd.ERSource):

@staticmethod

def p_electron(nq, a=0., b=-0.4, c=0.40, nq0=365.):

x = fd.tf_log10(nq / nq0 + 1e-9)

x = tf.dtypes.cast(x, dtype=fd.float_type())

return fd.safe_p(a * x**2 + b * x + c)

Note: * The p_electron takes an additional argument nq, the number of produced quanta. This is not an observable, but a ‘hidden variable’ of the process, just like e.g. energy or the number of produced photons. In flamedisx you have complete freedom to make any model function depend on any observable you like, but you cannot add dependencies on hidden variables. Such changes would require changes in the flamedisx core code. * fd.tf_log10 is a shortcut to tensorflow implementation of

log10 (the base-10 logarithm) * fd.safe_p clips the values to just above 0 and just below 1.

Let’s fit it, along with a few other parameters that could be a cause for uncertainty such as elife and g2:

[21]:

ll3 = fd.LogLikelihood(

sources=dict(er=MyERSource),

data=smalld,

a=(-0.5, 0.5, 2),

b=(-0.5, -0.2, 2),

c=(0.35, 0.45, 2),

elife=(400e3, 500e3, 2),

g2=(18., 22., 2),

free_rates=('er',))

Estimating mus: 100%|██████████| 5/5 [00:01<00:00, 2.85it/s]

Notice the mu estimation took a bit longer, because we have more parameters to fit. However, this scales linearly with the number of parameters and anchor points. This is because we assume the effects of each parameter (except the rate multipliers) on \(\mu\) are small, so we can add them linearly. For most parameters this is quite accurate, especially if you have a decent amount of data.

[22]:

%%time

bf3 = ll3.bestfit()

ll3.summary(bf3)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4576606443048107, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4612232212816464, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45830817741414237, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4533317155772725, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.46194220853236434, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45267980164655935, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45194717880572516, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4501741836896784, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4504075577538713, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45024517953489296, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4501511818569, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4502972607408098, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45001870962755, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.46608900249980534, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45204670432341737, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45030800874775456, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4500196095323822, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4662055499693639, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45204692154834786, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45030836563317445, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4500196809458382, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4662289220777778, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45204696220820545, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4503084354643344, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4500196950630045, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.46623359901358163, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4520469702305324, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45030844936910575, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4500196978798453, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.46623453450133095, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4520469718306261, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4503084521476045, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45001969844295, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.46623472160298024, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45204697215047007, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4503084527032061, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4500196985555604, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4662347590233173, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4520469722144319, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45030845281432247, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45001969857808205, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.46623476650747797, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45204697222722395, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4503084528365456, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45001969858258634, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.46623476800428776, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45204697222978235, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4503084528409902, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4500196985834872, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.46623476830369165, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45204697223029405, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45030845284187915, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4500196985836674, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4662347683634832, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.45204697223039636, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.450308452842057, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

/content/flamedisx/flamedisx/inference.py:249: OptimizerWarning: Optimizer requested likelihood at c = 0.4500196985837034, which is outside the bounds (0.35, 0.45).

OptimizerWarning)

er_rate_multiplier: 0.0279 +- 0.00421

a: -0.0901 +- 0.227

b: -0.432 +- 0.0622

c: 0.45 +- 0.0283

elife: 4.44e+05 +- 3.77e+04

g2: 21.5 +- 0.733

Correlation matrix:

er_rate_multiplier a b c elife g2

er_rate_multiplier 1.000 -0.002 -0.071 0.037 -0.032 0.005

a -0.002 1.000 0.471 -0.539 0.185 -0.035

b -0.071 0.471 1.000 -0.546 0.300 -0.024

c 0.037 -0.539 -0.546 1.000 -0.744 -0.251

elife -0.032 0.185 0.300 -0.744 1.000 -0.151

g2 0.005 -0.035 -0.024 -0.251 -0.151 1.000

CPU times: user 16.7 s, sys: 1.33 s, total: 18 s

Wall time: 15.4 s

This fit took longer, because it had a 6D rather than 2D parameter space to explore. Things could have been much, much worse. Since our likelihood is differentiable, the minimizer knew the gradient, which told it in which direction to go – quite useful if you are lost in six dimensions!

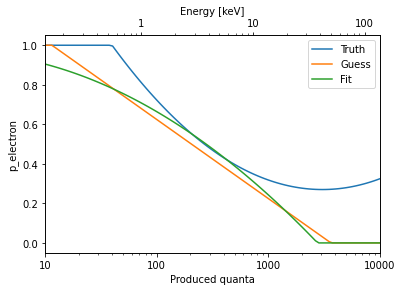

Finally, let’s compare the p_electron function we ust fitted with the ground truth – that is, the ones the data was simulated with:

[23]:

def plot_nq(s_class=fd.ERSource, plot_kwargs=None, twin=True,

# Eat params we don't need...

er_rate_multiplier=None, elife=None, g2=None,

**params):

if plot_kwargs is None:

plot_kwargs = dict()

nq = np.logspace(1, 4, 100)

plt.plot(nq, s_class.p_electron(nq, **params), **plot_kwargs)

plt.xlabel('Produced quanta')

plt.ylabel('p_electron')

plt.xlim(10, 1e4)

plt.xscale('log')

ax = plt.gca()

if twin:

ax2 = plt.twiny()

ax2.set_xscale('log')

ax2.set_xlim(*np.array(ax.get_xlim()) * fd.ERSource().work)

ax2.set_xlabel("Energy [keV]")

for x in [ax] + ([ax2] if twin else []):

x.xaxis.set_major_formatter(matplotlib.ticker.ScalarFormatter())

plt.sca(ax)

plot_nq(plot_kwargs=dict(label='Truth'))

plot_nq(MyERSource, plot_kwargs=dict(label='Guess'), twin=False)

plot_nq(MyERSource, plot_kwargs=dict(label='Fit'), twin=False, **bf3)

plt.legend(loc='upper right')

[23]:

<matplotlib.legend.Legend at 0x7f8c35badb70>

It seems we actually fitted something! The fit is of course pretty bad, especially outside the few-keV range where we have data, because we fitted to a ridiculously small number of events.

5. Why bother with high-dimensional likelihoods?

Let’s draw some mock ER data with a poor electron lifetime of 200 us – you’ll see why in a moment.

[24]:

df = fd.ERSource().simulate(5000, elife=200e3, full_annotate=True)

scatter_2x2d(df)

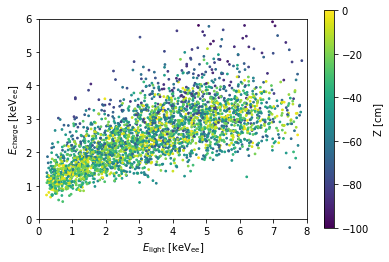

You can see the mean S2 decreases with z, due to the finite electron lifetime in the TPC. Usually, experiments correct for the mean position dependence of their observables. We can define the detector-independent quantities:

with W the liquid xenon work function, and g1 and g2 the detector response per produced photon/electron at the observed (x,y) position. Plotting these, you can see most of the position dependence is gone:

[25]:

def scatter_energy(df, s=3, cbar=True, **kwargs):

plt.scatter(df['e_light_vis'], df['e_charge_vis'],

c=df['z'], vmin=-100, vmax=0,

s=s)

plt.xlabel(r"$E_\mathrm{light}$ [$\mathrm{keV}_\mathrm{ee}$]")

plt.xlim(0, 8)

plt.ylabel(r"$E_\mathrm{charge}$ [$\mathrm{keV}_\mathrm{ee}$]")

plt.ylim(0, 6)

plt.gca().set_aspect('equal')

if cbar:

plt.colorbar(label='Z [cm]', **kwargs)

scatter_energy(df)

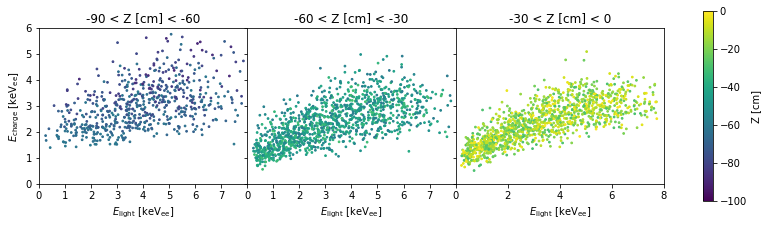

However, there is still a \(z\)-dependent effect: the fluctuation in E_charge is much larger in the bottom of the TPC than the bottom. You can see this more clearly if we split out the different z’s:

[26]:

f, axes = plt.subplots(1, 3, figsize=(14, 3.5), sharey=True)

plt.subplots_adjust(wspace=0)

for i, z_slice in enumerate([(-90, -60), (-60, -30), (-30, 0)]):

plt.sca(axes[i])

scatter_energy(df[(z_slice[0] < df['z']) & (df['z'] < z_slice[1])],

cbar=i == 2, ax=axes)

plt.title("%d < Z [cm] < %d" % z_slice)

if i != 0:

plt.ylabel("")

if i != 2:

plt.xticks(plt.xticks()[0][:-1])

The cause is simply a statistical effect: because the S2s in the bottom of the smaller, E_charge has a larger relative fluctuation. This means ER/NR discrimination at the bottom of the TPC will be worse than at the top.

The g1/g2 correction accounts for the mean position-dependence, but still loses information. This is one of the reasons that higher-dimensional likelihoods can provide a gain in sensitivity.